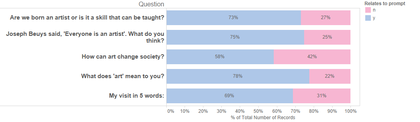

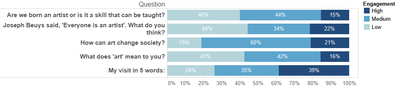

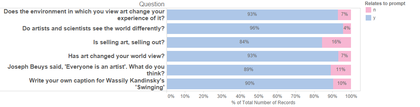

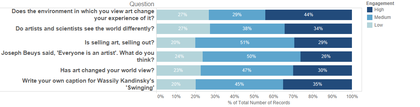

The analysis was applied to the response data from April 2014, as some additions and rewording of the questions took place during the first months of the project. The following graphs show the results of the coding.

Level two:

Bloomberg Interactives, Level 2 Tate Modern, Analysis of recorded responses to questions

© Tate

Engagement level with each question at interactives on Level 2 Tate Modern

© Tate

Level four:

A graph depicting the level of responses recorded to questions in regards to visitors’ agreement

© Tate

A graph depicting the level of engagement with questions at interactives on Level 4, Tate Modern.

The analysis helped the Interpretation team to answer the evaluation questions, to identify which questions worked better, and to compare the results of the interactives on each of the levels.

After the initial phase of analysis, the Interpretation team used the results to assess the impact of each question, making some changes in response to patterns that we observed. For example, a question originally posed on the Level four screens was, ‘Does architecture affect your view of art?’

We saw that this was receiving a roughly average proportion of on-topic responses (47%) but quite a high proportion of low-engagement answers (mostly ‘yes’ or ‘no’). We kept the topic, but rephrased the prompt to ‘Does the environment in which you view art change your experience of it?’ By using the phrase ‘your experience’ we hoped to elicit more in-depth responses and accounts of visitors’ reactions. Meanwhile, the question ‘When does written text become visual art?’ was dropped altogether, as we saw that a lower-than-average proportion of responses was on-topic (40%).

On Level two, we changed the question ‘Have you seen an artwork today that matches your mood? What and why?’ We saw a low proportion of on-topic responses to this prompt (11%, against an average of 33% for Level two kiosks as a whole), which supported our conjecture that the question was being asked too ‘early’ in a visit, potentially when many visitors are just starting to explore the gallery. Instead we asked the broader ‘What does ‘art’ mean to you?’

Discussing how to respond to the initial results raised the question of what evidence we were looking for when rating a prompt question’s success.
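The comparison described above, each prompt’s on-topic share set against the average for its level, can be sketched in a few lines of Python. This is only an illustration: the prompt labels and coded rows are made up and are not the project’s data, the function names are hypothetical, and it assumes the ‘average’ is the share of on-topic responses across all responses on the level (it could equally be the mean of the per-prompt shares).

```python
from collections import defaultdict

# Hypothetical coded responses: (prompt label, coded as on-topic?).
# Illustrative values only, not the project's data.
coded = [
    ("prompt A", True), ("prompt A", False),
    ("prompt A", False), ("prompt A", False),
    ("prompt B", True), ("prompt B", True),
    ("prompt B", False), ("prompt B", False),
]

def on_topic_share(rows):
    """Return {prompt: fraction of its responses coded on-topic}."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for prompt, on_topic in rows:
        totals[prompt] += 1
        hits[prompt] += int(on_topic)
    return {prompt: hits[prompt] / totals[prompt] for prompt in totals}

def below_average(rows):
    """Return prompts whose on-topic share is below the pooled share.

    Assumption: the level average is pooled over all responses.
    """
    overall = sum(int(on_topic) for _, on_topic in rows) / len(rows)
    shares = on_topic_share(rows)
    return sorted(p for p, share in shares.items() if share < overall)

shares = on_topic_share(coded)
flagged = below_average(coded)
```

A below-average share only flags a prompt for discussion; as the examples above show, the decision to rephrase or drop a question also drew on the engagement coding and on where in the visit the question was asked.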

The analysis also helped to assess the impact of the different interactive interfaces and settings in which people participate and write their comments. In assessing the success of prompts we applied slightly different criteria to the responses submitted on Viewpoints kiosks on different levels: we had a higher ‘tolerance’ for questions which generated less engaged answers on the Level two screens than for those on Level four (echoing the aim of the whole suite of Bloomberg Connects interactives to foster increased engagement over the course of a visit). However, the process of coding the responses showed the challenges and the limitations of the approach.

When coding the responses we look at the final output, but we do not know what happened before that response was entered in the system. A response that scores as short and simple may follow a long discussion between friends, or come from someone who has read many responses from other visitors, or from an overseas tourist whose mother tongue is not English. People who come to a museum have different levels of knowledge of the subject, so, for example, an artwork caption written by someone with little or general knowledge of art may be coded lower than a caption written by a specialist in art. Someone who has written ‘it has lots of circles and shapes’ in response to the prompt to write a caption for Wassily Kandinsky’s Swinging may be a young person with general knowledge of art who has spent a long time looking at and thinking about the work. But their response would be coded as ‘less engaged’ than an off-the-cuff remark from someone with more specialist knowledge.

If all the responses were unrelated or a ‘yes’ or ‘no’ type of answer, this would indicate a failure for the project, so the coding was useful for understanding how the interactives were used. However, when it comes to evaluating how this participatory interactive activity impacts on the visitor experience in the gallery and how engaged the responses are, this type of analysis has some limitations.